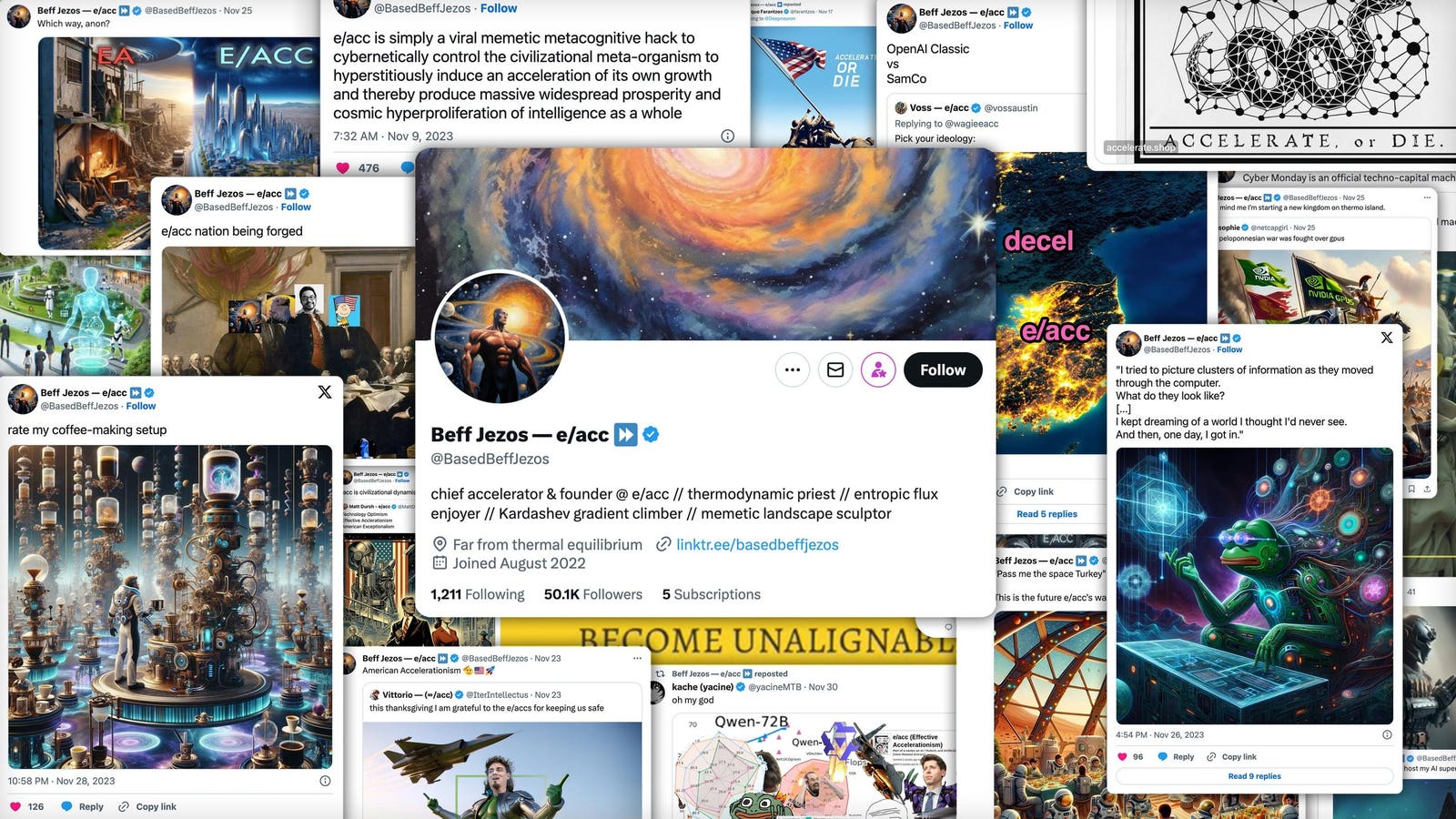

At various points, on Twitter, Jezos has defined effective accelerationism as “a memetic optimism virus,” “a meta-religion,” “a hypercognitive biohack,” “a form of spirituality,” and “not a cult.” …

When he’s not tweeting about e/acc, Verdon runs Extropic, which he started in 2022. Some of his startup capital came from a side NFT business, which he started while still working at Google’s moonshot lab X. The project began as an April Fools joke, but when it started making real money, he kept going: “It’s like it was meta-ironic and then became post-ironic.” …

On Twitter, Jezos described the company as an “AI Manhattan Project” and once quipped, “If you knew what I was building, you’d try to ban it.”

the wonderful thing about this story is the effort Forbes went to to dox a nazi

I spent way too much time arguing that NYT didn’t dox Slatescott.

I highly suspect the voice analysis thing was just to confirm what they already knew; otherwise it would have been like looking for a needle in a haystack.

People on twitter have been speculating that someone who knew him simply ratted him out.

i mean, probably. but also, nazis are just dogshit at opsec.

I still find it amusing that Siskind complained about being “doxxed” when he used his real first and middle name.

update: Verdon is now accusing another AI researcher of exposing him: https://twitter.com/GillVerd/status/1730796306535514472

Reading his timeline since the revelation is weird and creepy. It’s full of SV investors robotically pledging their money (and fealty) to his future efforts. If anyone still needs evidence that SV is a hive mind of distorted and dangerous group-think, this is it.

posting a screenshot to preserve the cringe in its most potent form:

yeah BasedBeffJezos is just an ironic fascist persona that has nothing to do with who I am, that’s why I’m gonna threaten anyone who associates me with BasedBeffJezos

Whoever said that all twitter bluechecks talk like anime villains was spot on

you might not like the consequences for exposing me as BasedEyesWHITEdragon yu-gi-boy!!!

Dude doxxed protest too much.

A whole lot of violent death threats in that thread.

Jezos has defined effective accelerationism as “a memetic optimism virus,” “a meta-religion,” “a hypercognitive biohack,” “a form of spirituality,” and “not a cult.”

“It’s like it was meta-ironic and then became post-ironic.”

Jezos described the company as an “AI Manhattan Project” and once quipped, “If you knew what I was building, you’d try to ban it.”

“Our goal is really to increase the scope and scale of civilization as measured in terms of its energy production and consumption,” he said. Of the Jezos persona, he said: “If you’re going to create an ideology in the time of social media, you’ve got to engineer it to be viral.”

Guillaume “BasedBeffJezos” Verdon appears, by all accounts, to be an utterly insufferable shithead with no redeeming qualities

“Our goal is really to increase the scope and scale of civilization as measured in terms of its energy production and consumption,” h

old and busted: paperclip maximizer

new hotness: entropy maximizer

He noted that Jezos doesn’t reflect his IRL personality. “The memetics and the sort-of bombastic personality, it’s what gets algorithmically amplified,” he said, but in real life, “I’m just a gentle Canadian.”

uwu im just a smollbean canadian

That “not a cult” quote strategically placed after all the cultish babble quotes mwah perfect journalism

Nerd rapture goofiness

HN discovers this article, almost a day later (laggards): https://news.ycombinator.com/item?id=38500192

A voice analysis conducted by Catalin Grigoras, Director of the National Center for Media Forensics, compared audio recordings of Jezos and talks given by Verdon

A particularly creepy doxxing by Forbes…

Oh no, are the tools developed by SV startups being used for stuff you don’t like? How sad HN.

I don’t want to libel the author by claiming the piece was planted by Beff himself, but what’s more likely, this writer who mostly covers what’s trending on tiktok gets a big scoop using voice recognition on a twitter space and then writes a glowing dossier? Or beff got on the phone with a publicist and conjured a big reveal with a softball interview all while namedropping his new startup?

extremely funny that they think this article makes him look good. also extremely funny that they think this is a big scoop

@sc_griffith @gerikson

Buried in that piece is the probable typo but certainly pointed “On X, the platform formally known as Twitter”

Oh no, are the tools developed by SV startups being used for stuff you don’t like? How sad HN.

tormenting the torment nexus. heh, how the turntables

former Google engineer.

Of course. At this point whenever I read something with the phrase former Google engineer I’m just gonna assume they’re doing something terrible.

q: how do you know if someone’s a former google engineer?

xoogler’s everywhere, a: AT GOOGLE WE USED TO HAVE A WAY TO…

In its reaction against both EA and AI safety advocates, e/acc also explicitly pays tribute to another longtime Silicon Valley idea. “This is very traditional libertarian right-wing hostility to regulation,” said Benjamin Noys, a professor of critical theory at the University of Chichester and scholar of accelerationism. Jezos calls it the “libertarian e/acc path.”

At least the Italian futurists were up front about their agenda.

“We’re trying to solve culture by engineering,” Verdon said. “When you’re an entrepreneur, you engineer ways to incentivize certain behaviors via gradients and reward, and you can program a civilizational system.”

Reading Nudge to engineer the ‘Volksschädling’ to board the trains voluntarily. Dusting off the old state eugenics compensation programs.

The fuck do they mean “solve culture”? Is culture a problem to be solved? Actually don’t answer that.

even more horrifying — they see culture as a system of equations they can use AI to generate solutions for, and the correct set of solutions will give them absolute control over culture. they apply this to all aspects of society. these assholes didn’t understand hitchhiker’s guide to the galaxy or any of the other sci fi they cribbed these ideas from, and it shows

The ultimate STEMlord misunderstanding of culture; something absolutely rife in the Silicon Valley tech-sphere.

These dudes wouldn’t recognize culture if it unsafed its Browning and shot them in the kneecaps.

It’s like pickup artistry on a societal scale.

It really does illustrate the way they see culture not as, like, a beautiful evolving dynamic system that makes life worth living, but instead as a stupid game to be won or a nuisance getting in the way of their world domination efforts

remember that Yudkowsky’s CEV idea was literally to analytically solve ethics

In an essay that somehow manages to offhandedly mention both evolutionary psychology and hentai anime in the same paragraph.

It’s like when he wore a fedora and started talking about 4chan greentexts in his first major interview. He just cannot help himself.

P.S. The New York Times recently listed “internet philosopher” Eliezer Yudkowsky as one of the major figures in the modern AI movement. This is the picture they chose to use.

this is the picture they chose to use.

You may not like it, but this is what peak rationality looks like.

Don’t have to have Culture War when you can just systemically deploy the exact culture you want right from the comfort of your prompt, amirite?!

(This is a shitpost idea but it’s probably halfway accurate, maybe modulo the prompt (but there will definitely be someone also trying that))

use case for urbit found

The best use case for Urbit is marking its proponents as first up against the wall when the revolution comes.