i’m trying to set up nginx as a reverse proxy to aggregate multiple services running on different ports on the server, so i can connect to all of them by going to a specific subdirectory. that way i can keep only one port open in the router between my lab and the main house network.

i’m using the following config file from an example i found to do this, with a landing page to let me get to the other services:

used config file

```nginx
server {
    listen 80;
    server_name 10.0.0.114; # Replace with your domain or IP

    # Redirect HTTP to HTTPS (the HTTPS server below listens on 1403, not 443)
    return 301 https://$host:1403$request_uri;
}

server {
    listen 1403 ssl; # Listen on port 1403 for HTTPS
    server_name 10.0.0.114; # Replace with your domain or IP

    ssl_certificate /certs/cert.pem; # Path to your SSL certificate
    ssl_certificate_key /certs/key.pem; # Path to your SSL certificate key

    location / {
        root /var/www/html; # Path to the directory containing your HTML file
        index index.html; # Default file to serve
    }

    location /transbt {
        # configuration for transmission
        proxy_pass http://10.89.0.3:9091/;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

but the problem i’m having is that, while nginx does redirect to transmission’s login prompt just fine, after logging in it tries to redirect me to 10.0.0.114:1403/transmission/web instead of remaining in 10.0.0.114:1403/transbt, which breaks the page. i’ve found a configuration file that should work, but it manually redirects each subdirectory transmission tries to use, and adds `proxy_pass_header X-Transmission-Session-Id;`, and i’m not sure what that accomplishes: github gist

is there a way to do it without needing to declare it explicitly for each subdirectory? especially since i need to set up other services, and i doubt i’ll find config files for those as well. it’s my first time setting up nginx, and i haven’t been able to find anything to make it work.

Edit: I forgot to mention. The server is still inside of a NAT. It’s not reachable from the outside. The SSL certificate is self-signed and it’s just for peace of mind because a lot of things connect to the home net. And none of the services I plan to use only support http.

For subdirectories it’s more complex as each application needs to be configured properly too, so you’ll need to go into transmission and tell it what subdirectory you’re using.

Or switch to subdomains, as that just works without any extra config and still lets you use one port for everything.

Not all services/apps work well with subdirectories through a reverse proxy.

Some services/apps have a config option to add a prefix to all paths on their side to help with it, some others don’t have any config and always expect paths after the domain to not be changed.

But if you need to do some kind of path rewrite only on the reverse proxy side to add/change a segment of the path, there can be issues if all path changes are not going through the proxy.

In your case, transmission internally doesn’t know about the subdirectory, so even if you can get to the index/login from your first page load, when the app itself changes paths it redirects you to a path without the subdirectory.
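One way around this without listing every sub-path is to proxy Transmission under the prefix it already uses instead of inventing a new one. This is only a sketch, and it assumes Transmission still serves its UI under its default `/transmission/` prefix (the `/transmission/web` redirect in the post suggests it does). The `/transbt` shortcut is a hypothetical convenience so the landing page link keeps working.

```nginx
# Sketch only: goes inside the existing `server { listen 1403 ssl; ... }` block.
# Assumes Transmission keeps its default URL prefix, /transmission/.
location /transmission/ {
    # No URI part after the port, so nginx forwards the request path unchanged
    # (/transmission/web/ stays /transmission/web/ on the backend).
    proxy_pass http://10.89.0.3:9091;

    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
}

# Optional shortcut (hypothetical): send the old landing page link to the real path.
location = /transbt {
    return 302 /transmission/web/;
}
```

Because the browser and Transmission now agree on the path, the redirect after login stays inside a location that nginx proxies. As far as I know nginx passes unknown response headers such as `X-Transmission-Session-Id` through by default, so the extra `proxy_pass_header` line from the gist is probably redundant, but treat that as an assumption and test it.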

Another example of this is with PWAs that when you click a link that should change the path, don’t reload the page (the action that would force a load that goes through the reverse proxy and that way trigger the rewrite), but instead use JavaScript to rewrite the path text locally and do DOM manipulation without triggering a page load.

To be honest, the best way out of this headache is to use subdomains instead of subdirectories, it is the standard used these days to avoid the need to do path rewrite magic that doesn’t work in a bunch of situations.

Yes, it could be annoying to handle SSL certificates if you don’t want or can’t issue wildcard certificates, but if you can do a cert with both `maindomain.tld` and `*.maindomain.tld` then you don’t need to touch that anymore and can use the same certificate for any service/app you could want to host behind the reverse proxy.

Would agree, don’t use sub paths.

Subdomains is the way, with an SSL certificate for each domain if you can.

I’m sorry. I forgot to mention it in the post. But the server is not facing the outside. It’s just behind an extra NAT to keep my computers separate from the rest of the home. There’s no domain name linking to it. I’m not sure if that impacts using subdomains.

The SSL certificates shouldn’t be a problem since it’s just a self signed certificate, I’m just using SSL as a peace of mind thing.

I’m sorry if I’m not making sense. It’s the first time I’m working with webservers. And I genuinely have no idea of what I’m doing. Hell. The whole project has basically been a baptism by fire, since it’s my first proper server.

It should not be an issue to have everything internal: you can set up a local DNS resolver, and configure the device that handles your DHCP (router or other) to set that as the default/primary DNS for any devices on your network.

To give you some options if you want to investigate, there is: dnsmasq, Technitium, Pi-Hole, Adguard Home. They can resolve external DNS queries, and also do domain rewrite/redirection to handle your internal only domain and redirect to the device with your reverse proxy.

That way, you can have a local domain like `domain.lan` or `domain.internal` that only works and is managed on your internal network. And can use subdomains as well.

> I’m sorry if I’m not making sense. It’s the first time I’m working with webservers. And I genuinely have no idea of what I’m doing. Hell. The whole project has basically been a baptism by fire, since it’s my first proper server.

Don’t worry, we all started almost the same, and gradually learned more and more, if you have any questions a place like this is exactly for that, just ask.

I’ll need to check. I doubt I’ll be able to setup a DNS resolver. Since I can’t risk the whole network going down if the DNS resolver fails. Plus the server will have limited exposure to the home net via the other router.

Still. Thanks for the tips. I’ll update the post with the solution once I figure it out.

Most routers, or devices, let you set up at least a primary and secondary DNS resolver (some let you add more), so you could have your local one as primary and an external one like google or Cloudflare as secondary. That way, if your local DNS resolver is down, it will directly go and query the external one, and still resolve them.

> Still. Thanks for the tips. I’ll update the post with the solution once I figure it out.

You are welcome.

Also, some routers allow you to add local DNS entries within their config.

Subpaths are things of the past (kinda) ! SSL wildcards are going to be a life saver in your homelab !

I have a self-signed rootCA + intermediateCA which are signing all my certificates for my services. But wait… It can get easier just put a wildcard domain for your homelab (*.home.lab) and access all your services in your lan with a DNS provider (pihole will be your friend!).

Here is a very simplified example:

- Create a rootCA (certificate authority) and put that on every device (PC, laptop, Android, iPhone, TV, box…)

- Sign a server certificate with that rootCA for the following wildcard domain: `*.home.lab` and put that behind a reverse proxy.

- Add pihole as DNS resolver for your local domain name (`*.home.lab`) or if you like you can manually add the routes on all devices… But that’s also a thing of the past !

- Let your proxy handle your services

Access all your services with the following url in your lan

This works flawlessly without the need to pay for any domain name, everything is local and managed by yourself. However, it’s not as easy as stated above… OpenSSL and TLS certificates are a beast to tame and need lots of reading ^^ and so does nginx or any other reverse proxy !

But as soon as you get the hang of it… You can add a new service in seconds :) (especially with docker containers !)

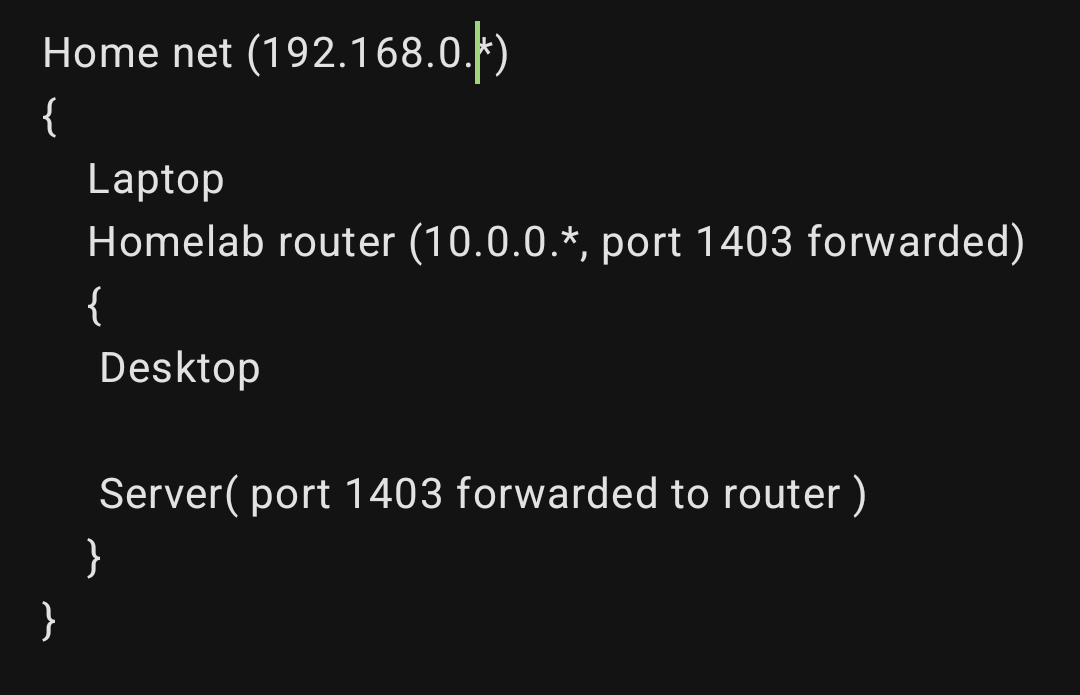

I think that pihole would be the best option. But coming to think of it… I think that to make it work I’d need two instances of pihole. Since the server is basically straddling two nats. With the inner router port forwarding port 1403 from the server. Basically:

To let me access the services both from the desktop and the laptop. I’d need to have two DNS resolvers, since for the laptop it needs to resolve to the 192.168.0.* address of the homelab router. While for the desktop it needs to resolve directly to the 10.0.0.* address of the server.

Also, little question. If I do manage to set it up with subdomains. Will all the traffic still go through port 1403? Since the main reason I wanted to setup a proxy was to not turn the homelab’s router into Swiss cheese.

… The rootCA idea though is pretty good… At least I won’t have Firefox nagging me every time I try to access it.

> (specially with docker containers !)

Already on it! I’ve made a custom skeleton container image using podman that, when started, runs a shell script that I customize for each service, while another script gets called via podman exec for all of them by a cronjob to update them. Plus they are all connected to a podman network with manually assigned IPs to let them talk to each other. Not how you’re supposed to use containers. But hey, it works. Add to that a btrfs cluster, data set to single, metadata set to raid1. So I can lose a disk without losing all of the data (they are scrap drives. Storage is prohibitively expensive here) + transparent compression + cronjob for scrub and deduplication.

I manage with most of the server. But web stuff just locks me up. :'-)

Sorry I didn’t respond earlier :S !

> To let me access the services both from the desktop and the laptop. I’d need to have two DNS resolvers, since for the laptop it needs to resolve to the 192.168.0.* address of the homelab router. While for the desktop it needs to resolve directly to the 10.0.0.* address of the server.

I’m not entirely sure if I get what you mean here. If you have a central DNS resolver like pihole in your LAN it can resolve to whatever is there. I have a pihole which resolves to itself (I can access it as pihole.home.lab) and resolves to my server’s reverse proxy, which handles all the port shenanigans and services hosted on my server. I think I can try to make a diagram to show how it works in my LAN right now, not sure if this can be helpful by any means, but this would allow me to have a more visual feedback of my own LAN setup :P. However, I do use Traefik as my reverse proxy for my docker containers, so it won’t apply to nginx and I’m not sure if this is possible (It probably is, but nginx is a mystery for me xD)

> Also, little question. If I do manage to set it up with subdomains. Will all the traffic still go through port 1403? Since the main reason I wanted to setup a proxy was to not turn the homelab’s router into Swiss cheese.

Your proxy should handle all the port things. Your proxy listens to all incoming traffic on :80 and :443 and “routes” it to the corresponding service and its port.
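For nginx specifically, a rough sketch of the same idea on the single port from the original post: nginx chooses the `server` block by the requested hostname, so every subdomain can share port 1403. The hostnames under `servo.internal` and the certificate file names are taken from elsewhere in this thread as placeholders; none of this is a tested config.

```nginx
# Sketch only: hostnames and certificate paths are placeholders.

# Landing page, also used when no other server_name matches.
server {
    listen 1403 ssl default_server;
    server_name servo.internal;

    ssl_certificate     /certs/star.servo.internal.crt;
    ssl_certificate_key /certs/star.servo.internal.key;

    root  /var/www/html;
    index index.html;
}

# Transmission on its own subdomain, same port, same certificate.
server {
    listen 1403 ssl;
    server_name transbt.servo.internal;

    ssl_certificate     /certs/star.servo.internal.crt;
    ssl_certificate_key /certs/star.servo.internal.key;

    location / {
        # Paths are passed through untouched, so the app's own redirects keep working.
        proxy_pass http://10.89.0.3:9091;

        # Host is not overridden here, so the backend sees nginx's default ($proxy_host).
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

Adding another service is then one more `server` block with a different `server_name` and `proxy_pass` target. Note that a `*.servo.internal` wildcard does not cover the bare `servo.internal` name unless that name is also listed in the certificate.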

While I do have my self-learned self-hosted knowledge, I’m not an IT guy, so I may be mistaken here and there. However, I can give you a diagram on How it works on my setup right now and also gift you a nice ebook to help you setup your mini-CA for your lan :)

Don’t worry. Lemmy is asynchronous after all. Instant responses aren’t expected. Plus. I know life gets in the way :-).

It was basically a misconception I had about how the homelab router would route the connection

Basically with pihole set up. It routes servo.internal to 192.168.1.y, the IP of the homelab router. So when a machine from the inside of the homelab. On 10.0.0.*, connects to the server. It will refer to it via the 192.168.1.y IP of the router.

The misconception was that I thought all the traffic was going to bounce between the homelab router and the home router. Going through the horrendously slow LAN cable that connects them and crippling the bandwidth between 10.0.0.* machines and the server.

I wanted to set up another pihole server for inside of the homelab. So it would directly connect to the server on its 10.0.0.* address instead of the 192.168.1.y. And not go and bounce needlessly between the two routers.

But apparently the homelab router realizes it’s speaking to itself. And routes the data directly to the server. Without passing through the home router and the slower Ethernet. So the issue is nonexistent, and I can use one pihole instance with 192.168.1.y for the server without issue. (Thanks to darkan15 for explaining that).

> While I do have my self-learned self-hosted knowledge, I’m not an IT guy, so I may be mistaken here and there.

I think most of us are in a similar situation. Hell. I weld for a living atm :-P.

> However, I can give you a diagram on How it works on my setup right now and also gift you a nice ebook to help you setup your mini-CA for your lan :)

The diagram would be useful. Considering that rn I’m losing my mind between man pages.

As for the book… I can’t accept. Just give me the name/ISBN and I’ll provide myself. Still. Thanks for the offer.

> (Thanks to darkan15 for explaining that).

I have to look at his answer to have a better understanding :P

> The diagram would be useful. Considering that rn I’m losing my mind between man pages.

I’m working on it right now :) I’m a bit overwhelmed with my own LAN setup, and trying to get some feedback from other users :P

> As for the book… I can’t accept. Just give me the name/ISBN and I’ll provide myself. Still. Thanks for the offer.

Good. If you have the money to spare please pay for it otherwise you know the drill :) (Myself I’m not able to pay the author so it’s kinda hypocrite on my end… But doing some publicity is also some kind of help I guess?)

Demystifying Cryptography with OpenSSL 3.0 by Alexei Khlebnikov (Packt)

ISBN: 978-1-80056-034-5

It’s very well written, even as a non-native it was easy to follow :). However, let me give you something along the road, something that will save you hours of looking around the web :) !

Part 5, Chapter 12: Running a mini-CA is the part you’re interested in and that’s the part I used to create my server certificates.

HOWEVER: When he generates the private keys, he uses the `ED448` algorithm, which is not going to work for SSL certificates because not a single browser accepts them right now (same thing goes for Curve25519). Long story short, if you don’t want to depend on NIST curves (NSA) fall back to RSA in your homelab ! If you are interested in that story go to p. 123:

> Brainpool curves are proposed by the Brainpool workgroup, a group of cryptographers that were dissatisfied with NIST curves because **NIST curves were not verifiably randomly generated, so they may have intentionally or accidentally weak security.**

Here is a working example for your certificates:

Book:

```
$ mkdir private
$ chmod 0700 private
$ openssl genpkey \
    -algorithm ED448 \
    -out private/root_keypair.pem
```

But should be:

```
$ mkdir private
$ chmod 0700 private
$ openssl genpkey \
    -algorithm RSA \
    -out private/root_keypair.pem
```

You have to use RSA or whatever curve you prefer but accepted by your browser for EVERY key you generate !

Other than that, it’s a great reading book :) And good study material for cryptography introduction !

i’m not sure if it’s equivalent. but in the meantime i have cobbled up a series of commands from various forums to do the whole process, and i came up with the following openssl commands.

```
openssl genrsa -out servorootCA.key 4096
openssl req -x509 -new -nodes -key servorootCA.key -sha256 -days 3650 -out servorootCA.pem
openssl genrsa -out star.servo.internal.key 4096
openssl req -new -key star.servo.internal.key -out star.servo.internal.csr
openssl x509 -req -in star.servo.internal.csr -CA servorootCA.pem -CAkey servorootCA.key -CAcreateserial -out star.servo.internal.crt -days 3650 -sha256 -extfile openssl.cnf -extensions v3_req
```

with only the crt and key files on the server, while the rest is on a usb stick for keeping them out of the way.

hopefully it’s the same. though i’ll still go through the book out of curiosity… and come to think of it. i do also need to setup calibre :-).

thanks for everything. i’ll have to update the post with the full solution after i’m done, since it turned out to be a lot more messy than anticipated…

Do yourself a favor and use the default ports for `HTTP(80)`, `HTTPS(443)` or `DNS(53)`, you are not port forwarding to the internet, so there should be no issues.

That way, you can do URLs like `https://app1.home.internal/` and `https://app2.home.internal/` without having to add ports on anything outside the reverse proxy.

From what you have described your hardware is connected something like this:

Internet -> Router A (`192.168.0.1`) -> Laptop (`192.168.0.x`), Router B (`192.168.0.y`)

You could run only one DNS on the laptop (or another device) connected to `Router A` and point the domain to `Router B`, redirect for example the domain `home.internal` (recommend `<something>.internal` as it is the intended one to use by convention), to the `192.168.0.y` IP, and it will redirect all devices to the server by port forwarding.

If `Router B` has Port Forwarding of Ports `80` and `443` to the Server `10.0.0.114` all the requests are going to reach it, no matter the LAN they are from. The devices connected to `Router A` will reach the server thanks to port forwarding, and the devices on `Router B` can reach anything connected to `Router A` Network `192.168.0.*`, they will make an extra hop but still reach it.

Both routers would have to point the primary DNS to the Laptop IP `192.168.0.x` (should be a static IP), and secondary to either Cloudflare `1.1.1.1` or Google `8.8.8.8`.

That setup would be dependent on having the laptop (or another device) always turned ON and connected to `Router A` network to have that DNS working.

You could run a second DNS on the server for only the `10.0.0.*` LAN, but that would not be reachable from `Router A` or the Laptop, or any device on that outer LAN, only for devices directly connected to `Router B`, and the only change would be to change the primary DNS on `Router B` to the Server IP `10.0.0.114` to use that secondary local DNS as primary.

Lots of information, be sure to read slowly and separate steps to handle them one by one, but this should be the final setup, considering the information you have given.

You should be able to setup the certificates and the reverse proxy using subdomains without much trouble, only using IP:PORT on the reverse proxy.

I think I’ll do this with one modification. I’ll make nginx serve the landing page with the subdomains when computers from router A try to access. ( by telling nginx to serve the page with the subdomains when contacted by 10.0.0.1) while I’ll serve another landing page that bypasses the proxy, by giving the direct 10.0.0.* IP of the server with the port, for computers inside router B .

Mostly since the Ethernet between router a and b is old. And limits transfers to 10Mbps. So I’d be handicapping computers inside router B by looping back. Especially since everything inside router B is supposed to be safe. And they’ll be the ones transferring most of the data to it.

Thanks for the breakdown. It genuinely helped in understanding the Daedalus-worthy path the connections need to take. I’ll update the post with my final solution and config once I make it work.
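The "different landing page depending on where the request comes from" idea could be sketched with nginx's `geo` module, which maps the client address to a variable. This assumes, as described in the thread, that requests forwarded by Router B show up at the server with source address 10.0.0.1; that depends on how the router does NAT, so check the nginx access log first. The two HTML file names are hypothetical.

```nginx
# Sketch only. The geo block must live in the http context
# (outside any server block); file names below are hypothetical.
geo $landing_page {
    default   index-direct.html;      # clients inside Router B: raw IP:port links
    10.0.0.1  index-subdomains.html;  # traffic arriving via Router B's port forward
}

server {
    listen 1403 ssl;
    server_name 10.0.0.114;

    ssl_certificate     /certs/cert.pem;
    ssl_certificate_key /certs/key.pem;

    # Only the landing page itself depends on the client address.
    location = / {
        root /var/www/html;
        try_files /$landing_page =404;
    }
}
```

If the forwarded requests turn out to keep their original 192.168.0.* source address instead, the same `geo` block works with `192.168.0.0/24` in place of `10.0.0.1`.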

If you decide on doing the secondary local DNS on the server on `Router B` network, there is no need to loop back, as that DNS will maintain domain lookup and the requests on `10.0.0.x` all internal to `Router B` network.

On `Router B` then you would have as primary DNS the Server IP, and as secondary an external one like Cloudflare or Google.

You can still decide to put rules on the reverse proxy if the origin IP is from `192.168.0.*` or `10.0.0.*` if you see the need to differentiate traffic, but I think that is not necessary.

I think I didn’t explain myself the right way.

Computers from inside of `Router B` will access the server via its IP. Nginx will only serve an HTML file with the links for them. Basically acting as a bookmark page for the IP:port combos. While anything from `Router A` will receive a landing page that has the subdomains, that will be resolved by pihole (exposed to the machines on `Router A` as an open port on `router b`) and will make them pass through the proxy.

So basically the DNS will only be used on machines from `Router A`, and the rules on nginx are just to give links to the reverse proxy if the machine is from `router A` (I.e. the connection is coming from 10.0.0.1 from the server’s POV, or maybe the server name in the request. I’ll have to mess with nginx), or the page with the raw IP of the server + port of the service if coming from `Router B`.

`router A` is unfortunately junk from my ISP, and it doesn’t allow me to change the DNS. So I’ll just add `Router B` (and thus, the pihole instance that’s on the server) as a primary DNS, and an external one as a secondary DNS as fallback.

> If you decide on doing the secondary local DNS on the server on `Router B` network, there is no need to loop back, as that DNS will maintain domain lookup and the requests on `10.0.0.x` all internal to `Router B` network

Wouldn’t this link to the `192.168.0.y` address of `router B` pass through `router A`, and loop back to `router B`, routing through the slower cable? Or is the router smart enough to realize it’s just talking to itself and just cut out `router A` from the traffic?


Consider using Caddy. It is much simpler to setup and all the required headers get set automatically.

Seems like something you may need to change in Transmission’s configuration, somewhere. Because it’s Transmission that is redirecting you and what you want is for that redirect to include the uri prefix.

Otherwise, I see two options:

- use a regex location block and match against all expected patterns

- use a dedicated subdomain (i.e: server block) for this (each) service you want to proxy and just proxy all traffic to that domain with a root location block.

The latter is what I would do.
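For reference, the first option might look roughly like this. The list of path segments (`web`, `rpc`, `upload`) is an assumption about what Transmission requests under its default prefix and would need checking against the access log; any path not listed falls through to the other locations.

```nginx
# Sketch only: goes inside the existing `server { listen 1403 ssl; ... }` block.
# The alternation below is an assumed list of Transmission's paths.
location ~ ^/transmission/(web|rpc|upload)(/|$) {
    # In a regex location proxy_pass must not carry a URI part,
    # so the original request path is forwarded as-is.
    proxy_pass http://10.89.0.3:9091;

    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
}
```

The drawback is the one raised in the original question: every new service needs its own hand-maintained pattern, which is why the dedicated subdomain is the lower-maintenance choice.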

If I remember correctly there was an option for that. I need to dig up the manual…

Still, I think I’m going to need to change approach. Eventually one of the other services will bite me if I keep using subdirectories.